- Mar 11, 2022

The DRM video I'm trying to get is also above 500 MB and the download is getting stuck around 430 MB.

I have just removed the use of aria2c within the yt-dlp command and it seems to work fine, so I will create a new release in a moment and see if that makes any difference for people.

sim0n00ps, I saw that your downloader uses aria2c. Is it built into the downloader, or do you have to download it and set the path? It wasn't mentioned in the readme.

Another thing I noticed:

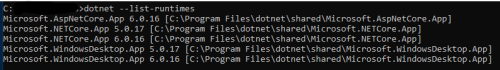

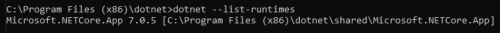

`dotnet --list-runtimes` uses the 64-bit dotnet.exe by default and doesn't list Microsoft.NETCore.App 7.0.5 if only the x86 (32-bit) version is installed. The downloader probably uses the 32-bit dotnet.exe and finds the correct version if no 64-bit versions exist. It was a bit confusing when I installed the downloader.

Glad it works, fills me with some confidence lmao

The new 1.5 version was able to download the video that 1.4 couldn't. Thanks!

Glad it works for you. I can have a look into that.

Awesome work sim0n00ps.

The scraper works a treat and is much handier than the python script option.

I'm kicking myself a bit that I took a punt on TubeDigger, but I guess I can still use it for other purposes.

A minor request, if I may, with regard to the scraper's functionality.

Would it be possible to add an option to go back to the main menu after a successful scrape, rather than having to exit the program and launch it again?

I know you can select multiple OF accounts to scrape in one go, but on the off chance that I want to do individual scrapes, said option would be good.

lol, I was coming here to request this. We need an option to download by date range. Like if I just re-subbed to someone, I would only want to download the posts from like the last 3 weeks or so.

sim0n00ps An option or hardcoded change to start scraping at the latest wall post? Right now I would have to download 70GB again for some models to get the newest post.

I'll make it so it goes newest first, give me a few mins

One thing I will say, though: try to avoid too many user-configurable settings. I think this is part of why DigitalCriminal's script has so many issues; people can configure it in so many different ways that it's impossible for him to test every configuration. Obviously some might be necessary and shouldn't cause issues, like adding dates to the start of files.

Looks like it's working with the latest update. Seems good so far, will test with more DRM videos. Thank you for your hard work!

Newest release should now download newest media first.

I too would like it to download by latest post first.

Many thanks mate.

I may be mistaken, but I thought I read somewhere that there is a setting in the scraper that automatically skips existing/already downloaded content?

It does currently skip dupe content; however, b0b is saying he doesn't want to download 70GB of stuff he doesn't already have downloaded just to get a user's latest posts, hence why I've made it so the newest posts are downloaded first.

What is the structure you have, if you don't mind me asking? As long as it follows a structure like Posts/Free/Videos somewhere in your structure, it might be a case of letting you change the root folder from `__user_data__` to whatever you want. However, if it's completely different, that's where it might be a little difficult, as I don't want to overload the `auth.json` file with loads of different values people can change, because that might lead to problems as mentioned above.

sim0n00ps Would it be possible to customize the downloaded folder structures? It's not a huge priority, but it would be nice to have it structured the same as my settings on DC's old scraper. Thanks.

It just removes the Free and Paid folders and combines them. So instead of something like Messages/Free/Videos, it's just Messages/Videos, and everything free or paid goes in the same folder.

I'll consider it, but it's probably not one of the things that will make it onto the priority list right now.